Despite billions invested in AI development, coding agents remain handcuffed by primitive tooling. The proliferation of AI stacks continues, yet none have been purpose-built to empower coding agents to truly excel.

Today, these agents face two critical challenges. First, they write code the way a human would in Notepad, without the help of an IDE. This creates inefficiencies: more iterations, higher token consumption, and inconsistent outcomes. Second, most agents lack the ability to manipulate infrastructure, what we call “AI Workspaces”, to test and validate code dynamically. Instead, they are mostly limited to basic file operations like reading and writing code, without the ability to execute, test, or validate their outputs in real environments. This lack of runtime context severely impacts their effectiveness.

My previous article 'Agent-Agnostic Middleware Infrastructure' explored the importance of enabling agents to work beyond file manipulation and utilize dynamic workspaces for testing and refinement. Without such capabilities, AI agents remain hindered, unable to validate their work or guarantee successful outcomes.

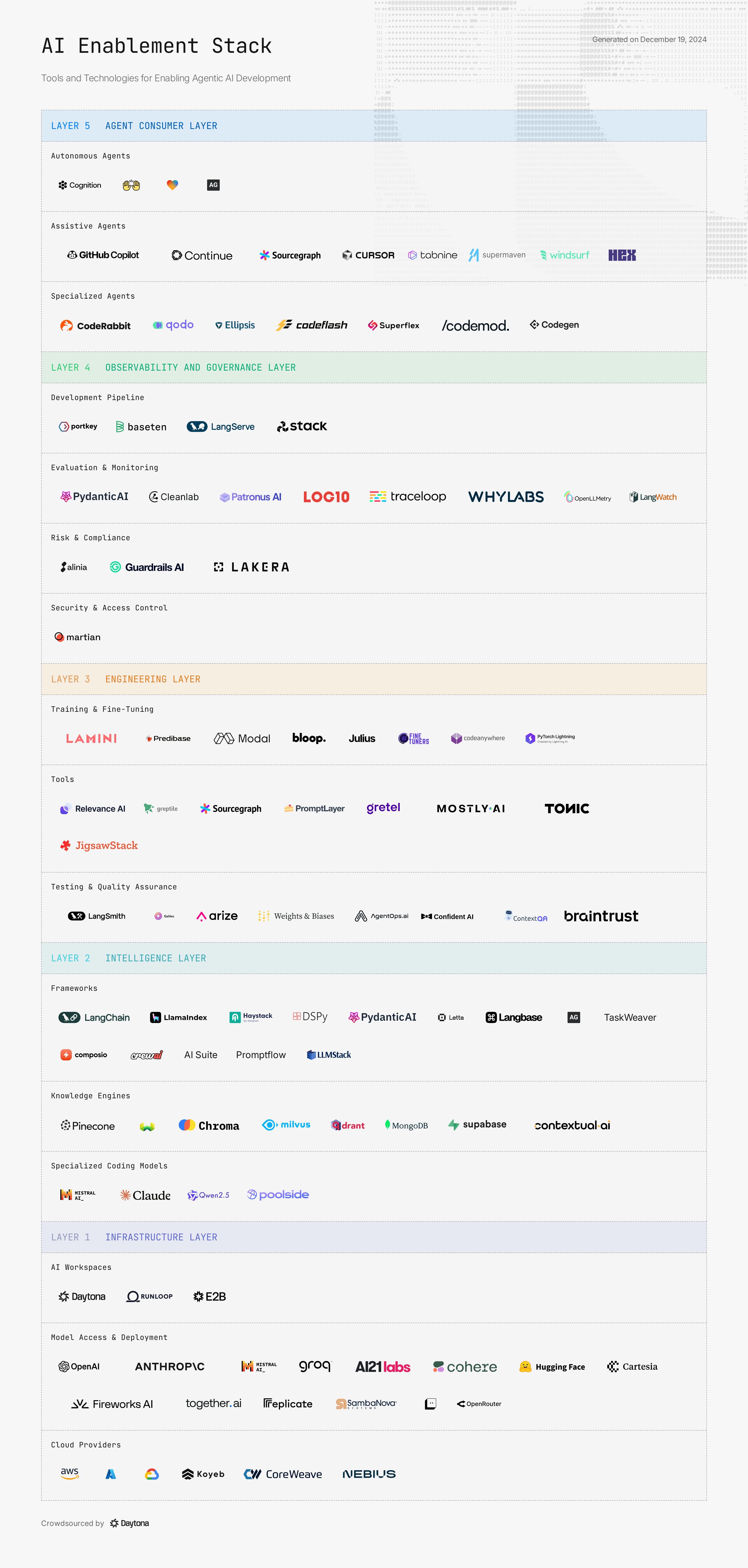

To address these challenges, we've mapped out the AI Enablement Stack. This comprehensive taxonomy categorizes the essential tools and platforms needed for AI agents to operate effectively while highlighting critical gaps in today's ecosystem that must be filled for coding agents to reach their full potential.

Why an AI Enablement Stack?

Most existing AI stacks take the form of lists—collections of companies grouped under common titles, often to outline competitive landscapes rather than to map solutions for specific use cases. These stacks provide some clarity but fail to address the practical requirements of building AI systems for real-world applications.

We see a future where both companies and individuals will create their own AI agents, tailored for diverse use cases. These agents may solve internal challenges, or they might scale into larger systems that enhance customer experiences, making products or services more effective and easier to use.

Our thesis is simple: the world is moving toward a highly agentic future. As this shift unfolds, it’s crucial to map out all the building blocks required to create capable, production-ready AI agents. This benefits not only the vendors currently listed but also future consumers of these tools: in a space where new companies and technologies emerge daily, a clear map helps them understand what's available to realize their agentic ambitions.

The AI Enablement Stack, co-created by Nikola Balic and me, was designed to provide exactly this: a five-layer structure that builds from foundational infrastructure up to the consumer-facing AI agents that deliver real-world value. Each layer provides critical capabilities that, when combined, unlock an AI agent's full operational potential.

Contributing to the AI Enablement Stack

The AI Enablement Stack is maintained as code in our public GitHub repository. You can contribute directly through a pull request if you notice a missing vendor or need to update any information. Once approved, the Stack visualization and README.md list automatically regenerate to reflect the changes, ensuring it always stays current.
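
As a rough illustration of what "maintained as code" can look like, the sketch below renders a Markdown vendor list from structured data. The schema, the shape of `STACK`, and the `render_readme` function are assumptions made for this example, not the repository's actual format or generator.

```python
# Hypothetical schema: layer -> category -> vendor names.
# The sample entries are taken from this article, not from the repository.
STACK = {
    "Infrastructure": {
        "Cloud Providers": ["Google Cloud", "CoreWeave"],
        "AI Workspaces": ["Daytona"],
    },
    "Intelligence": {
        "Knowledge Engines": ["Pinecone", "Supabase"],
    },
}


def render_readme(stack: dict[str, dict[str, list[str]]]) -> str:
    """Render the stack as nested Markdown headings with sorted vendor bullets."""
    lines = []
    for layer, categories in stack.items():
        lines.append(f"## {layer}")
        for category, vendors in categories.items():
            lines.append(f"### {category}")
            # Sorting keeps the output stable regardless of contribution order.
            lines.extend(f"- {vendor}" for vendor in sorted(vendors))
    return "\n".join(lines)


readme = render_readme(STACK)
print(readme)
```

With a setup along these lines, adding a vendor is a one-line change to the data, and the rendered list follows from it.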

Understanding the Five Layers

The AI Enablement Stack comprises five interconnected layers that provide the complete foundation for AI agent development. Starting with infrastructure and progressing through intelligence, engineering, and governance, each layer enables critical capabilities that deliver value through consumer-facing AI agents. Let's explore each layer's role.

Layer 1: Infrastructure - Starting from the Foundation

At the base of the AI Enablement Stack lies the Infrastructure Layer, the backbone that powers all AI development and deployment. This layer comprises three essential components: Cloud Providers, Model Access, and AI Workspaces, which ensure that AI systems can be built, tested, and deployed efficiently.

Cloud Providers offer scalable resources for training and running AI models. Examples include Google Cloud, with specialized hardware such as TPUs and its AI pipeline tools, and CoreWeave, which builds its offering on Nvidia GPUs.

Building on this foundation is Model Access, which lets developers interact with and deploy AI models. Cohere provides an API for accessing advanced language models, enabling developers to integrate capabilities directly into their applications. With its extensive library of pre-trained models and tools, Hugging Face enables developers to deploy and fine-tune AI systems.

Finally, at the top of this layer are AI Workspaces, where developers and AI agents interact to create and refine AI systems. Daytona, for example, provides AI workspace management that supports agentic workflows for individuals or large-scale enterprises.
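
To make the workspace idea concrete, here is a minimal sketch of a single agent step that writes generated code into an isolated directory, executes it, and reads back the result. It uses only the Python standard library. `run_in_workspace` is a hypothetical helper, not Daytona's API, and a temporary directory merely stands in for a real isolated workspace.

```python
import subprocess
import sys
import tempfile
from pathlib import Path


def run_in_workspace(code: str, timeout: float = 10.0) -> tuple[bool, str]:
    """Write `code` to a throwaway directory, execute it, and report the outcome.

    Hypothetical helper: the temp directory is a stand-in for a real workspace.
    Returns (succeeded, stdout on success or stderr on failure).
    """
    with tempfile.TemporaryDirectory() as workspace:
        script = Path(workspace) / "candidate.py"
        script.write_text(code)
        try:
            result = subprocess.run(
                [sys.executable, str(script)],
                cwd=workspace,
                capture_output=True,
                text=True,
                timeout=timeout,
            )
        except subprocess.TimeoutExpired:
            return False, "timed out"
    ok = result.returncode == 0
    return ok, result.stdout if ok else result.stderr


# An agent can use the feedback to decide whether to keep or revise its output.
good = run_in_workspace("assert sum([1, 2, 3]) == 6\nprint('ok')")
bad = run_in_workspace("assert sum([1, 2, 3]) == 7")
```

The point of the sketch is the feedback loop: the error text from a failed run becomes runtime context the agent can act on, instead of guessing from file contents alone.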

Layer 2: Intelligence - The Cognitive Core

At the center of the AI Enablement Stack lies the Intelligence Layer, the cognitive core that drives AI systems. This layer encompasses three essential components: Frameworks, Knowledge Engines, and Specialized Models, which collectively power processing, decision-making, and information retrieval.

Specialized Models bring domain-specific expertise to AI systems. Codestral, Mistral AI's code model, focuses on code generation, helping enterprises rapidly prototype and deploy software solutions. Poolside Malibu excels at integrating contextual data into AI workflows.

Knowledge Engines focus on information retrieval, organization, and contextual understanding. Pinecone specializes in vector database technology, enabling AI systems to perform efficient and scalable searches across large datasets. Supabase, with its open-source relational database capabilities, complements this by providing structured data storage and fast querying, ensuring AI agents have the right data at their fingertips. Together, these tools enable seamless data handling and retrieval, empowering AI systems to deliver precise, context-aware outputs.
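
The retrieval idea behind vector search can be sketched in a few lines. The example below is a brute-force cosine-similarity lookup over toy three-dimensional vectors, written with the standard library only. It is not Pinecone's API, and the document IDs and embedding values are invented for illustration. A production vector database would typically replace the linear scan with an index built for scale.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors; 0.0 if either has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k(query: list[float], index: dict[str, list[float]], k: int = 2) -> list[str]:
    """Return the IDs of the k stored vectors most similar to the query."""
    scored = [(cosine_similarity(query, vec), doc_id) for doc_id, vec in index.items()]
    return [doc_id for _, doc_id in sorted(scored, reverse=True)[:k]]


# Toy embeddings (made up): real ones come from an embedding model.
INDEX = {
    "auth-module": [0.9, 0.1, 0.0],
    "billing-module": [0.1, 0.9, 0.1],
    "login-tests": [0.8, 0.2, 0.1],
}

results = top_k([1.0, 0.0, 0.0], INDEX)
print(results)  # ['auth-module', 'login-tests']
```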

Frameworks provide the foundational tools for structuring AI applications. LangChain has emerged as a key player, enabling developers to integrate multiple AI models and data sources into cohesive workflows. Similarly, PydanticAI simplifies the creation of structured, schema-aware AI interactions, making it easier to manage complex inputs and outputs in intelligent systems.
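
To show what "schema-aware" means in practice, the sketch below validates a model's JSON reply against a declared structure before the rest of the application touches it. It deliberately uses only the standard library and is not PydanticAI's API. The `CodeReview` schema, its fields, and `parse_review` are assumptions chosen for the example.

```python
import json
from dataclasses import dataclass


@dataclass
class CodeReview:
    """Hypothetical schema for a structured reply from a code-review agent."""

    file: str
    severity: str
    comment: str

    def __post_init__(self):
        # Dataclasses do not enforce annotations, so check types explicitly.
        for name in ("file", "severity", "comment"):
            if not isinstance(getattr(self, name), str):
                raise ValueError(f"{name} must be a string")
        if self.severity not in {"info", "warning", "error"}:
            raise ValueError(f"unknown severity: {self.severity!r}")


def parse_review(raw: str) -> CodeReview:
    """Parse a model's JSON reply, raising ValueError if it does not fit the schema."""
    data = json.loads(raw)  # malformed JSON raises JSONDecodeError, a ValueError
    try:
        return CodeReview(**data)
    except TypeError as exc:  # missing keys, unexpected keys, or non-object JSON
        raise ValueError(str(exc)) from exc


review = parse_review(
    '{"file": "app.py", "severity": "warning", "comment": "unused import"}'
)
```

Rejecting a malformed reply at this boundary lets the caller retry or re-prompt instead of passing bad data downstream.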

Layer 3: Engineering - The Developer's Toolkit

The Engineering Layer serves as the developer's toolkit for building AI applications, bridging the gap between raw AI capabilities and production-ready solutions. This layer includes essential tools and resources for training models, developing applications, and ensuring quality through testing, empowering developers to create robust and reliable AI systems.

Testing and Quality Assurance ensure that AI systems function reliably and meet production standards. LangSmith, part of the LangChain ecosystem, offers a powerful debugging and evaluation framework for AI chains, helping developers test and optimize the performance of complex workflows. Coupled with the tracking capabilities of Weights & Biases, developers gain a robust system for diagnosing issues and improving model performance across iterations.

Tools enable seamless orchestration and optimization of AI systems during development. Greptile simplifies the process of extracting, transforming, and managing data for AI workflows, ensuring that models are working with high-quality and context-relevant data. PromptLayer provides a powerful solution for prompt management and debugging, helping developers track changes, experiment with configurations, and optimize interactions between prompts and AI models. These tools ensure that development workflows are smooth, efficient, and well-documented.

Training and Fine-Tuning Tools are at the core of this layer, enabling developers to train and refine AI models. These tools ensure that AI models are efficient, accurate, and optimized for their intended tasks. Bloop and LLM Stack play a pivotal role in fine-tuning and operationalizing AI workflows. Bloop simplifies code search and understanding, providing developers with a powerful tool for navigating and managing large codebases during AI development. LLM Stack tools enhance the customization and deployment of large language models, streamlining the process of adapting these systems to specific use cases while optimizing their performance in production.

Layer 4: Observability and Governance - The Control Layer

The Observability and Governance Layer is the control layer of the AI Enablement Stack, ensuring AI systems are monitored, evaluated, secured, and governed effectively. This layer includes four essential components: Development Pipeline, Evaluation & Monitoring, Risk & Compliance, and Security & Access Control, which ensure that AI systems operate reliably, transparently, and within organizational standards.

Security & Access Control ensures that AI systems are protected from unauthorized access and malicious actions. LiteLLM provides lightweight solutions for managing API security, ensuring that sensitive AI workflows are secured and access is controlled effectively.

Risk & Compliance. AI systems must meet ethical and regulatory standards, and this component ensures alignment with both. Guardrails AI integrates guardrails directly into AI workflows to prevent unintended behavior and ensure responsible AI usage. Lakera provides governance tools that assess the ethical implications and risks of AI models, helping teams manage liabilities and adhere to compliance frameworks. These tools mitigate risks and promote accountability in AI development and deployment.

Evaluation & Monitoring. This component tracks AI systems' performance, integrity, and health to prevent drift, anomalies, and performance degradation. Pydantic Logfire provides structured logging and performance monitoring tailored to AI models. WhyLabs offers comprehensive tools for monitoring data quality, helping identify shifts or inconsistencies in real time. Additionally, Traceloop facilitates the debugging and evaluation of AI pipelines, providing insights into system behavior and improving reliability. These tools enable teams to maintain system performance and ensure dependable outputs.
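
A simple way to picture drift detection is to compare a recent window of some metric against a trusted baseline. The sketch below flags drift when the recent mean sits more than a chosen number of standard errors from the baseline mean. It is a generic statistical check written with the standard library, not the method used by any of the tools named above. The latency figures and the threshold of 3.0 are invented for illustration.

```python
from statistics import mean, stdev


def drifted(baseline: list[float], recent: list[float], threshold: float = 3.0) -> bool:
    """Flag drift when the recent mean is over `threshold` standard errors from the baseline mean."""
    spread = stdev(baseline)
    if spread == 0:
        # A constant baseline: any change in the mean counts as drift.
        return mean(recent) != mean(baseline)
    standard_error = spread / len(recent) ** 0.5
    return abs(mean(recent) - mean(baseline)) / standard_error > threshold


# Hypothetical response latencies in seconds.
baseline_latency = [1.0, 1.1, 0.9, 1.0, 1.2, 0.8, 1.0, 1.1]
steady = drifted(baseline_latency, [1.0, 1.1, 0.9, 1.0])
degraded = drifted(baseline_latency, [1.8, 2.0, 1.9, 2.1])
```

Here `steady` evaluates to `False` and `degraded` to `True`. The same comparison applies to other tracked signals, such as output length or evaluation scores.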

Development Pipeline ensures that AI systems are built and deployed efficiently, with tools that streamline model lifecycle management. Portkey enables developers to automate and optimize workflows across different stages of AI application development. Baseten supports fast deployment and management of machine learning models, while LangServe focuses on serving language models reliably and scalably. Together, these tools ensure that AI development remains smooth and adaptable to evolving requirements.

Layer 5: Agent Consumer Layer - The Interface Layer

The Agent Consumer Layer represents the culmination of the AI Enablement Stack, where the power of AI is translated into tangible, user-facing applications. It is at this layer that AI agents, powered by the underlying infrastructure, intelligence, engineering, and governance layers, interact with users and systems to deliver real-world value.

This layer is home to a broad spectrum of agents, ranging from fully autonomous systems capable of operating independently to assistive tools that enhance human productivity and specialized agents tailored to niche tasks. The true power of this layer emerges when agents leverage the capabilities built into the layers below—from infrastructure and intelligence to engineering controls and governance.

Conclusion: Building Tomorrow's Agentic AI Ecosystem

While the examples of agents today are impressive, they represent only the beginning of what is possible. As outlined in our thesis, we envision a future where an infinite number of agents will emerge to address a vast array of use cases. These agents will not only solve highly specialized problems but also empower individuals and organizations to create custom solutions that align with their unique needs.

The Agent Consumer Layer is more than just the interface—it is the realization of the entire AI Enablement Stack’s potential. Every layer below contributes to making these agents functional, reliable, and impactful. As AI agents become integral to software development, their ecosystem will expand in sophistication and practical impact.

Help Shape the Future of AI Development

Help us maintain the most comprehensive view of the AI agent ecosystem—whether by adding missing vendors, updating information, or sharing the AI Enablement Stack with your network, your contribution helps the entire community build better AI solutions. Visit our GitHub repository to get started.