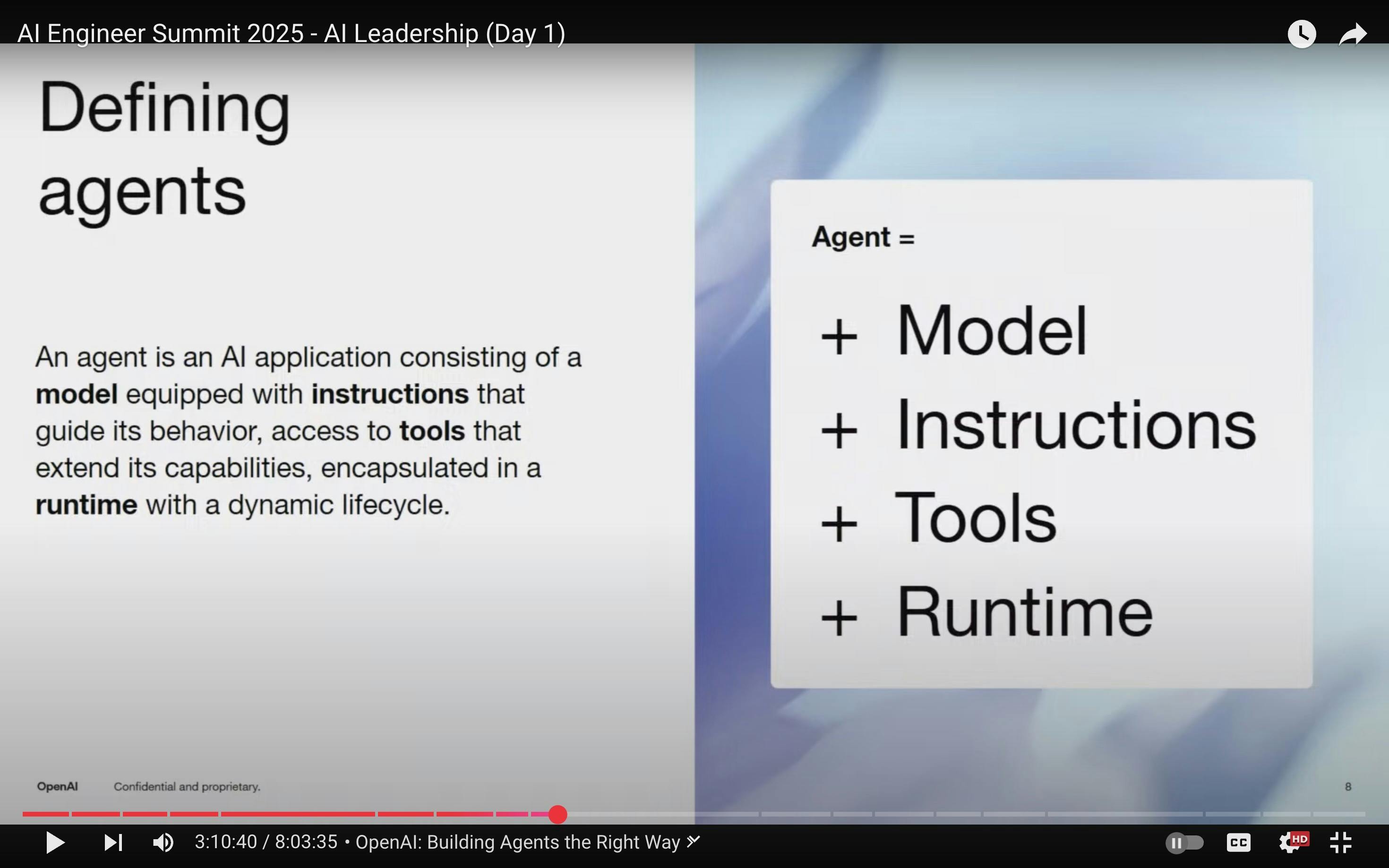

The definition of AI agents is evolving. At yesterday's AI Engineer Summit in NYC, OpenAI redefined agents as more than just models and prompts—they require a runtime with a dynamic lifecycle to operate effectively.

As developers of Daytona, a platform built for secure and scalable AI-driven development, we see this as validation of a critical insight:

AI agents aren't just code—they're stateful applications that need specialized infrastructure.

TL;DR

AI agents now require runtime environments, not just models and prompts

Agents need specialized infrastructure for security and scaling

Dynamic runtimes must handle state, resources, and agent collaboration

Why AI Agents Demand Dynamic Runtimes

1. Flexibility and Scalability

AI agents operate in diverse environments, each with unique requirements and constraints. A dynamic runtime lets an agent adapt to varying workloads, scale resources up and down as demand changes, and maintain performance without human intervention. This flexibility is essential when the same agent is deployed across applications with very different load profiles.
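To make "scale resources as demand changes" concrete, here is a minimal sketch of a queue-depth scaling rule. The function name, the one-replica-per-N-tasks policy, and the default bounds are illustrative assumptions, not Daytona's actual scaling logic. Production autoscalers, such as the Kubernetes Horizontal Pod Autoscaler, also use richer metrics and stabilization windows.

```python
import math


def desired_replicas(queued_tasks: int, tasks_per_replica: int,
                     min_replicas: int = 1, max_replicas: int = 20) -> int:
    """Hypothetical scaling rule: one sandbox replica per `tasks_per_replica`
    queued tasks, clamped to [min_replicas, max_replicas]."""
    if tasks_per_replica <= 0:
        raise ValueError("tasks_per_replica must be positive")
    # Round up so a partial batch of tasks still gets a replica.
    needed = math.ceil(queued_tasks / tasks_per_replica)
    return max(min_replicas, min(max_replicas, needed))


print(desired_replicas(0, 4))    # 1  (never scale below the floor)
print(desired_replicas(10, 4))   # 3
print(desired_replicas(500, 4))  # 20 (capped to bound cost)
```

The floor keeps one warm environment ready for the next request, and the ceiling keeps a burst of tasks from turning into unbounded spend.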

2. Security Can't Be Optional

Our experience with the OpenHands project shows that AI agents require several critical security measures. They must be properly isolated to prevent unauthorized system access or "AI escape." The runtime must execute AI-generated code safely and enforce strict limits on CPU and memory usage. Network access should be restricted to what the task requires, and file system access must be tightly controlled through sandboxing.
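You can approximate the CPU and memory controls described above on a single POSIX machine with Python's standard `resource` and `subprocess` modules. This is a minimal sketch under stated assumptions, not the OpenHands or Daytona implementation. It caps CPU time and address space for a child process that runs AI-generated code. It does not restrict network or file system access, which requires OS-level isolation such as containers or virtual machines. `run_untrusted` is a hypothetical helper name.

```python
import resource
import subprocess
import sys


def _limit_resources(cpu_seconds: int, memory_bytes: int):
    """Build a preexec_fn that caps CPU time and address space in the child process."""
    def apply() -> None:
        resource.setrlimit(resource.RLIMIT_CPU, (cpu_seconds, cpu_seconds))
        resource.setrlimit(resource.RLIMIT_AS, (memory_bytes, memory_bytes))
    return apply


def run_untrusted(code: str, cpu_seconds: int = 2,
                  memory_bytes: int = 512 * 1024 * 1024,
                  wall_timeout: float = 5.0) -> subprocess.CompletedProcess:
    """Run AI-generated Python code in a separate, resource-limited process (POSIX only).

    Limits CPU time and memory only; network and file system access are NOT isolated.
    Raises subprocess.TimeoutExpired if the wall-clock timeout is exceeded.
    """
    return subprocess.run(
        # -I: isolated mode (ignores PYTHON* env vars and user site-packages)
        [sys.executable, "-I", "-c", code],
        capture_output=True,
        text=True,
        timeout=wall_timeout,
        preexec_fn=_limit_resources(cpu_seconds, memory_bytes),
    )


result = run_untrusted("print(1 + 1)")
print(result.returncode, result.stdout.strip())  # 0 2

runaway = run_untrusted("while True: pass", cpu_seconds=1, wall_timeout=10)
print(runaway.returncode < 0)  # True: the child was killed by a signal at the CPU limit
```

Python's documentation warns that `preexec_fn` is not safe in programs that use threads. A multi-threaded runtime would typically apply such limits from a dedicated launcher process or through its container engine instead.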

3. Lifecycle Complexity Is Exploding

AI agents must respond to real-time data and changing conditions. A runtime with a dynamic lifecycle allows agents to modify their behavior, update models, and integrate new tools seamlessly. Modern agent patterns require:

Multi-agent collaboration

Handoffs between specialized models

Graceful failure recovery

Resource-aware scaling
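Graceful failure recovery and handoffs can be combined in one small pattern. The runtime retries a specialized handler with exponential backoff, then hands the task to a fallback. The sketch below assumes handlers are plain Python callables standing in for agents or model endpoints. `run_with_recovery` and the example handlers are hypothetical names.

```python
import time
from typing import Callable, List, Sequence

Handler = Callable[[str], str]


def run_with_recovery(task: str, handlers: Sequence[Handler],
                      retries: int = 2, base_delay: float = 0.1) -> str:
    """Try handlers in priority order. Each handler gets `retries` extra attempts with
    exponential backoff (base_delay, 2*base_delay, ...) before the task is handed off
    to the next handler. Raises RuntimeError if every handler fails."""
    errors: List[str] = []
    for handler in handlers:
        name = getattr(handler, "__name__", repr(handler))
        for attempt in range(retries + 1):
            try:
                return handler(task)
            except Exception as exc:  # a real runtime would retry only errors known to be transient
                errors.append(f"{name} attempt {attempt + 1}: {exc!r}")
                if attempt < retries:
                    time.sleep(base_delay * 2 ** attempt)
    raise RuntimeError("all handlers failed:\n" + "\n".join(errors))


# Hypothetical handlers standing in for a specialized agent and a general fallback.
def specialist(task: str) -> str:
    raise TimeoutError("model endpoint timed out")


def generalist(task: str) -> str:
    return f"generalist handled: {task}"


print(run_with_recovery("summarize logs", [specialist, generalist], base_delay=0.01))
# generalist handled: summarize logs
```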

The New Anatomy of AI Agents

OpenAI's updated definition breaks agents into four core components:

Agent = Model + Instructions + Tools + Runtime

Model: The foundational AI or machine learning model that powers the agent.

Instructions: The guidelines or prompts that steer the agent's behavior.

Tools: External resources or APIs that extend the agent's capabilities.

Runtime: The execution environment that supports the agent's operations with a dynamic lifecycle.
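One way to read the four-part formula is as a data structure. The following sketch is a hypothetical illustration, not OpenAI's or Daytona's API. The model is any callable that maps a prompt to text, and it is assumed to reply in a `tool_name:argument` format. Tools are named functions, and `LocalRuntime` is a stand-in that executes tool calls in-process and keeps a history.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


class LocalRuntime:
    """Stand-in runtime: runs tool calls in-process and records what happened.
    A production runtime would add isolation, resource limits, and persistence."""

    def __init__(self) -> None:
        self.history: List[str] = []

    def execute(self, tool: Callable[[str], str], arg: str) -> str:
        result = tool(arg)
        self.history.append(result)
        return result


@dataclass
class Agent:
    model: Callable[[str], str]             # Model: maps a prompt to a reply
    instructions: str                       # Instructions: steer the model's behavior
    tools: Dict[str, Callable[[str], str]]  # Tools: named capabilities the model may invoke
    runtime: LocalRuntime = field(default_factory=LocalRuntime)  # Runtime: where actions execute

    def step(self, user_input: str) -> str:
        # Assumed convention: the model replies "tool_name:argument".
        decision = self.model(f"{self.instructions}\n{user_input}")
        name, _, arg = decision.partition(":")
        return self.runtime.execute(self.tools[name], arg)


# Hypothetical stand-in for an LLM call that always chooses the "upper" tool.
def fake_model(prompt: str) -> str:
    return "upper:hello runtime"


agent = Agent(model=fake_model, instructions="Use tools when helpful.",
              tools={"upper": str.upper})
print(agent.step("Say hello"))  # HELLO RUNTIME
```

Keeping the runtime as its own component means you can swap it, for example for a sandboxed one, without touching the model, instructions, or tools.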

Barry Zhang from Anthropic reinforced this definition during the second day of the AI Engineer Summit. The code snippet on his slide also demonstrates what OpenAI defines as the "runtime with a dynamic lifecycle."

This example demonstrates why traditional static infrastructure is insufficient. These agents need an environment that supports continuous execution, state management, and dynamic tool interaction.

This better reflects how real AI agents work. They are not static models responding to prompts but dynamic systems that interact with their environment over time. The pattern is elegant in its simplicity, capturing the essential elements of agency: perception, decision-making, and action.
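The perceive-decide-act loop described above can be sketched in a few lines of Python. This is not the code from Barry Zhang's slide, and every name below is an assumption made for illustration. `Environment` is a toy counter, and `decide` is a deterministic stand-in for an LLM call. Note the `max_steps` bound: an unbounded loop is exactly the kind of behavior a runtime needs to contain.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Environment:
    """Toy environment: a counter the agent must drive to a target value."""
    value: int = 0
    target: int = 3
    log: List[str] = field(default_factory=list)

    def observe(self) -> str:
        return f"value={self.value} target={self.target}"

    def apply(self, action: str) -> None:
        if action == "increment":
            self.value += 1
        self.log.append(action)


def decide(instructions: str, observation: str) -> str:
    """Deterministic stand-in for an LLM call: stop once the target is reached."""
    parsed = dict(part.split("=") for part in observation.split())
    return "done" if parsed["value"] == parsed["target"] else "increment"


def run_agent(env: Environment, instructions: str, max_steps: int = 10) -> List[str]:
    for _ in range(max_steps):                      # bounded: never loop forever
        observation = env.observe()                 # perception
        action = decide(instructions, observation)  # decision
        if action == "done":
            break
        env.apply(action)                           # action: mutate environment state
    return env.log


print(run_agent(Environment(), "Reach the target."))  # ['increment', 'increment', 'increment']
```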

Traditional cloud infrastructure wasn't built for these requirements.

Daytona's Runtime for Agentic Workflows

According to OpenAI's definition, a "runtime with a dynamic lifecycle" is an operational environment for software applications, particularly complex systems like AI agents, that adapts and evolves in response to changing conditions, requirements, and feedback.

We built Daytona's runtime specifically for AI workloads. Our platform is designed to support the dynamic lifecycle requirements essential for effectively deploying and managing AI agents.

Key Features of Daytona's Runtime

Intelligent Resource Management

Dynamic Scaling: Automatically provisions and scales environments based on workload demands, optimizing performance and cost-efficiency.

Resource Optimization: Manages CPU, memory, and storage allocation with automated cleanup of unused resources.

Secure Isolation: Operates each AI agent in a sandboxed environment to prevent cross-interference and maintain security.
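Automated cleanup of unused resources usually comes down to tracking last activity and stopping anything idle past a timeout. This sketch shows that idea under assumed names (`SandboxPool`, `touch`, `reap`). It is not Daytona's implementation, and the `stop` callback stands in for whatever actually tears down a sandbox. Because the `clock` is injectable, you can test the logic without waiting.

```python
import time
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class SandboxPool:
    """Tracks when each sandbox was last used and stops those idle past a timeout."""
    idle_timeout: float                              # seconds of inactivity allowed
    clock: Callable[[], float] = time.monotonic      # injectable for testing
    last_active: Dict[str, float] = field(default_factory=dict)

    def touch(self, sandbox_id: str) -> None:
        """Record activity (e.g. a command or API call) for a sandbox."""
        self.last_active[sandbox_id] = self.clock()

    def reap(self, stop: Callable[[str], None]) -> List[str]:
        """Stop every sandbox idle for at least idle_timeout; return their IDs."""
        now = self.clock()
        idle = [sid for sid, seen in self.last_active.items()
                if now - seen >= self.idle_timeout]
        for sid in idle:
            stop(sid)                 # hypothetical teardown hook
            del self.last_active[sid]
        return idle


now = [0.0]
pool = SandboxPool(idle_timeout=60, clock=lambda: now[0])
pool.touch("sbx-a")
pool.touch("sbx-b")
now[0] = 30.0
pool.touch("sbx-b")        # sbx-b is still in use
now[0] = 70.0
stopped: List[str] = []
print(pool.reap(stopped.append))  # ['sbx-a']
```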

Adaptive Lifecycle Management

Real-Time Evolution: Enables on-the-fly integration of new models, instructions, and tools.

Performance Intelligence: Continuously monitors agent performance and automatically implements optimizations.

State Management: Maintains and updates agent state throughout its operational lifecycle.
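State management across an agent's lifecycle often starts with durable checkpoints, so an agent can resume after a restart. This is a minimal sketch that assumes agent state is JSON-serializable. `AgentState`, `save_checkpoint`, and `load_checkpoint` are hypothetical names. The code writes to a temporary file and then calls `os.replace`, which avoids leaving a half-written checkpoint if the process dies mid-write.

```python
import json
import os
import tempfile
from dataclasses import asdict, dataclass, field
from typing import List


@dataclass
class AgentState:
    step: int = 0
    memory: List[str] = field(default_factory=list)


def save_checkpoint(state: AgentState, path: str) -> None:
    """Write state to a temp file in the same directory, then rename it over `path`."""
    directory = os.path.dirname(os.path.abspath(path))
    fd, tmp_path = tempfile.mkstemp(dir=directory, suffix=".tmp")
    try:
        with os.fdopen(fd, "w") as f:
            json.dump(asdict(state), f)
        # Atomic on POSIX when both paths share a filesystem: readers never see a partial file.
        os.replace(tmp_path, path)
    except Exception:
        os.unlink(tmp_path)  # clean up the temp file if writing or renaming failed
        raise


def load_checkpoint(path: str) -> AgentState:
    """Return the saved state, or a fresh state if no checkpoint exists yet."""
    if not os.path.exists(path):
        return AgentState()
    with open(path) as f:
        return AgentState(**json.load(f))


with tempfile.TemporaryDirectory() as d:
    path = os.path.join(d, "agent_state.json")
    state = load_checkpoint(path)          # fresh state on first run
    state.step += 1
    state.memory.append("user asked for a report")
    save_checkpoint(state, path)
    print(load_checkpoint(path))  # AgentState(step=1, memory=['user asked for a report'])
```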

Comprehensive Integration Framework

Universal Connectivity: Provides robust API support for seamless interaction with external tools and services, including Python and TypeScript SDKs.

Flexible Architecture: Supports diverse AI frameworks and toolsets through modular design.

Standardized Protocols: Ensures consistent communication between agents and external systems.
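Consistent communication usually means every message shares one envelope that receivers validate before acting on it. The sketch below uses a hypothetical JSON envelope with the fields `msg_id`, `sender`, `recipient`, `kind`, and `payload`. The field names and the `parse_message` helper are assumptions for illustration, not a Daytona or industry-standard protocol.

```python
import json
import uuid
from dataclasses import asdict, dataclass, field
from typing import Any, Dict

# Hypothetical envelope schema: field name -> required Python type after JSON decoding.
ENVELOPE_FIELDS = {"msg_id": str, "sender": str, "recipient": str, "kind": str, "payload": dict}


@dataclass
class Message:
    sender: str
    recipient: str
    kind: str                   # e.g. "task", "result", "error"
    payload: Dict[str, Any]
    msg_id: str = field(default_factory=lambda: str(uuid.uuid4()))

    def to_json(self) -> str:
        return json.dumps(asdict(self))


def parse_message(raw: str) -> Message:
    """Decode and validate an envelope; reject anything missing or mistyped."""
    data = json.loads(raw)
    if not isinstance(data, dict):
        raise ValueError("message must be a JSON object")
    for name, expected in ENVELOPE_FIELDS.items():
        if not isinstance(data.get(name), expected):
            raise ValueError(f"field {name!r} missing or not {expected.__name__}")
    # Unknown keys are dropped rather than passed through.
    return Message(**{name: data[name] for name in ENVELOPE_FIELDS})


msg = Message(sender="planner", recipient="coder", kind="task", payload={"goal": "add tests"})
received = parse_message(msg.to_json())
print(received.payload["goal"])  # add tests
```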

The Future of Agent Runtimes

As AI agents grow more sophisticated, their infrastructure needs will evolve. We're betting on three trends:

Composable Environments: Agents that dynamically assemble their toolkits from shared components.

Cross-Agent Communication: Secure protocols for agent-to-agent collaboration.

Ethical Guardrails: Runtime-level enforcement of AI safety principles.
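Runtime-level enforcement means the checks run in the runtime, outside the model, so a prompt cannot talk its way past them. As one narrow, hypothetical example, the sketch below gates agent-proposed shell commands with a deny-by-default allowlist. `check_command` and the allowlist contents are assumptions. Real guardrails would cover far more than command execution.

```python
import shlex
from typing import FrozenSet, Tuple

SHELL_METACHARACTERS = set(";|&<>`$")


def check_command(command: str, allowed: FrozenSet[str]) -> Tuple[bool, str]:
    """Deny-by-default gate the runtime applies before running an agent-proposed command."""
    try:
        argv = shlex.split(command)
    except ValueError as exc:  # e.g. unbalanced quotes
        return False, f"unparseable command: {exc}"
    if not argv:
        return False, "empty command"
    if argv[0] not in allowed:
        return False, f"executable {argv[0]!r} is not allowlisted"
    if any(ch in SHELL_METACHARACTERS for token in argv for ch in token):
        return False, "shell metacharacters are not permitted"
    return True, "ok"


ALLOWED = frozenset({"ls", "cat", "pytest"})
print(check_command("pytest -q tests/", ALLOWED))  # (True, 'ok')
print(check_command("rm -rf /", ALLOWED))          # (False, "executable 'rm' is not allowlisted")
print(check_command("ls $(whoami)", ALLOWED))      # (False, 'shell metacharacters are not permitted')
```

An allowlist alone does not stop `cat` from reading a secrets file. That is why a check like this complements sandboxing rather than replacing it.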

Start Building Smarter Agents

The definition of an AI agent and the requirements for effectively supporting it are evolving. A robust runtime with a dynamic lifecycle is more than an add-on; it is a foundational component that ensures AI agents can operate efficiently, adapt in real time, and scale seamlessly.

The new generation of AI agents needs more than inference and prompts; it needs purpose-built infrastructure. Daytona's runtime handles the messy work of state, security, and scaling so you can focus on agent logic.